
1. Large Language Model Basics

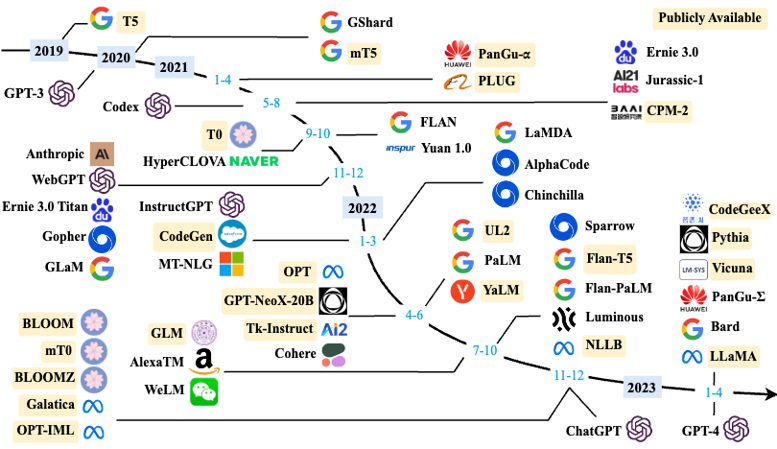

In the following sections, we explore the attention mechanism, which lets a model weight different parts of its input according to their relevance to the element currently being processed. We then study the Transformer architecture, the foundation of many state-of-the-art language models, and examine how it has reshaped Natural Language Processing (NLP). Finally, we introduce generative pre-trained language models such as GPT and cover the network structures of large language models, optimization techniques for the attention mechanism, and the practical applications built on these foundations.
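To make "focusing on specific parts of the input" concrete before the detailed sections, here is a minimal pure-Python sketch of scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V, the attention form used in the Transformer. The function names and the toy query, key, and value matrices are illustrative assumptions, not code from this chapter. Practical implementations operate on batched tensors (with masking and multiple heads) rather than nested lists.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def softmax(xs: List[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> Tuple[Matrix, Matrix]:
    """Return (output, weights) for softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(k[0])
    scale = 1.0 / math.sqrt(d_k)
    weights: Matrix = []
    for q_row in q:
        # Scaled dot-product similarity between this query and every key.
        scores = [scale * sum(a * b for a, b in zip(q_row, k_row)) for k_row in k]
        # Normalize the scores into attention weights that sum to 1.
        weights.append(softmax(scores))
    # Each output row is the attention-weighted average of the value rows.
    output = [
        [sum(w * v_row[j] for w, v_row in zip(w_row, v)) for j in range(len(v[0]))]
        for w_row in weights
    ]
    return output, weights


# Toy example (hypothetical data): one query attending over three positions.
q = [[1.0, 0.0]]
k = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
v = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
out, w = scaled_dot_product_attention(q, k, v)
print([round(x, 2) for x in w[0]])    # [0.4, 0.2, 0.4]
print([round(x, 2) for x in out[0]])  # [0.4, 0.2]
```

The query matches keys 0 and 2 more closely than key 1, so those positions receive larger weights and dominate the output; this is the sense in which the model "focuses" on parts of the input.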